How are Clinical Decision Support Artifacts Tested Today?

Noam H. Arzt, Ph.D.

In October 2018 the Centers for Disease Control and Prevention (CDC) issued a Request for Information (RFI) for a National Test Collaborative (NTC). Through a series of questions, the RFI seeks opinions and information about "The development of a national testbed (notionally called the National Test Collaborative (NTC)) for real-world testing of health information technology (IT)" and "Approaches for creating a sustainable infrastructure" to achieve it.

The scope of this RFI is daunting. Rather than tackle the whole topic broadly but superficially, it might be more useful to take just one Clinical Decision Support (CDS) domain and show as completely as possible how testing is currently done. I'm going to choose a topic area that I work with a lot: clinical decision support for immunizations (see my comprehensive article on this topic for additional background). Sorry if this is a bit long, but part of the message is just how involved this can be for just one small area.

CDS for Immunizations serves as a good example for a number of reasons:

- Immunizations are a core public health and preventative medicine intervention.

- Clinical decision support for immunization is well understood, well documented, and implemented in a large variety of public (including Immunization Information Systems [IIS]) and private (including EHRs and Personal Health Records [PHR]) systems.

- Because CDS artifacts in this domain are mature, mature testing tools already exist as well.

CDS for immunizations is usually based on clinical knowledge maintained by CDC and developed by a Federal advisory committee, the Advisory Committee on Immunization Practices (ACIP). The clinical knowledge is then transformed into logic artifacts that can be incorporated into CDS services for inclusion in larger systems that require the knowledge. IIS have been at the forefront of CDS for immunizations: for over twenty years it has been part of their core functionality to evaluate immunization histories and forecast future immunizations in support of clinical care and public health surveillance.

To support this initiative, CDC led the development of a consensus logic specification (CDSi) based on the input of specialists in the field (HLN has participated from the start). CDC continues to maintain this specification, but it is not the only specification that exists. HLN's own open source Immunization Calculation Engine (ICE) project also provides documentation of its default schedule on a public wiki. Both the CDSi Logic Specification and the ICE Default Ruleset are interpretations of ACIP clinical guidelines. The two are similar but not exactly alike, since ACIP guidelines are not written with computerization in mind. It is important to note that CDC has purposely declined to implement any specific software system using its CDSi Logic Specification, leaving it to others to use the documentation as they see fit.

Testing CDS for Immunizations

Testing immunization logic has some particularly interesting nuances. Unlike many other clinical domains, immunization recommendations change simply through the passage of time: the medical history for a patient can be completely unchanged, yet from one day to the next the recommendations for that patient can differ (for example, an MMR is recommended on a child's first birthday but would not be recommended just a day before). This means that when creating test cases for these rules, care must be taken to adjust the test case data to account for the passage of time. Testing a record today that was created last week for a six-month-old child will not exercise the same scenario, because the child has since aged. Additionally, the rules related to one vaccine can affect the evaluation and recommendation of others, so even the most minor changes to the rules should involve full regression testing of the entire rule set to ensure there has been no inadvertent impact elsewhere.
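
The aging problem described above can be illustrated with a minimal Python sketch. Everything here is hypothetical: the `ImmunizationTestCase` record, its field names, and the `rebase` helper are assumptions made for illustration, not part of CDSi, ICE, or any real test tool. The idea is simply to shift every date in a stored case by the number of days elapsed since the case was written, so the patient is the same age at test time as the analyst intended.

```python
from dataclasses import dataclass, replace
from datetime import date
from typing import Tuple


@dataclass(frozen=True)
class ImmunizationTestCase:
    """Hypothetical test record; field names are illustrative only."""
    name: str
    created_on: date              # day the analyst wrote the case
    birth_date: date
    dose_dates: Tuple[date, ...]  # administration dates of prior doses


def rebase(case: ImmunizationTestCase, run_date: date) -> ImmunizationTestCase:
    """Shift all dates by the days elapsed since the case was written."""
    shift = run_date - case.created_on
    return replace(
        case,
        created_on=run_date,
        birth_date=case.birth_date + shift,
        dose_dates=tuple(d + shift for d in case.dose_dates),
    )


def age_in_days(case: ImmunizationTestCase, on: date) -> int:
    """Patient age in days on the given date."""
    return (on - case.birth_date).days


case = ImmunizationTestCase(
    name="six-month-old, one prior dose",
    created_on=date(2018, 10, 1),
    birth_date=date(2018, 4, 1),
    dose_dates=(date(2018, 6, 1),),
)
run_date = date(2018, 10, 8)  # the same case, run a week later
rebased = rebase(case, run_date)

print(age_in_days(case, case.created_on))  # 183: age the analyst intended
print(age_in_days(case, run_date))         # 190: unadjusted case has aged
print(age_in_days(rebased, run_date))      # 183: rebased case has not
```

A fixed shift in days preserves intervals but not calendar boundaries such as birthdays, so a real tool would likely need something more careful. Another option, where the CDS service supports it, is to leave the data alone and pass the intended evaluation date explicitly.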

One feature of the CDC CDSi Logic Specification is the inclusion of a detailed set of test cases meant to be used by software developers to test the correctness of their implementation of the logic. The test cases (now numbering more than 700) are distributed in structured Microsoft Excel and XML formats. A number of public health and commercial systems have implemented the CDSi Logic Specification and used the test cases to test their work. CDC has continued to maintain and alter the test cases as the Logic Specification has changed over time due to routine changes in the underlying clinical guidelines.

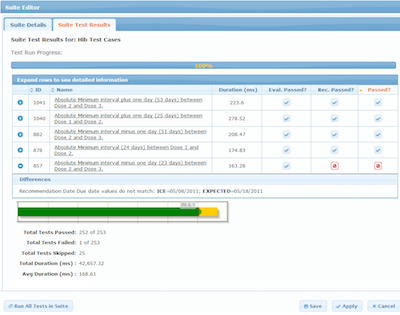
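
To make the idea of running a distributed set of test cases against an implementation concrete, here is a rough Python sketch of a regression run. It does not use the CDSi test case format or any real engine: the dictionary layout of `CASES`, the `toy_engine` stand-in (which knows only the first-birthday MMR example mentioned earlier and is deliberately not clinical logic), and `edited_engine` are all assumptions made for illustration.

```python
from datetime import date, timedelta
from typing import Callable, Dict, List, Optional, Tuple

# Vaccine group -> earliest recommended date, or None when nothing is due.
Forecast = Dict[str, Optional[date]]
Doses = List[Tuple[str, date]]
Engine = Callable[[date, Doses, date], Forecast]


def first_birthday(birth_date: date) -> date:
    try:
        return birth_date.replace(year=birth_date.year + 1)
    except ValueError:  # born on 29 February
        return date(birth_date.year + 1, 3, 1)


def toy_engine(birth_date: date, doses: Doses, assessment_date: date) -> Forecast:
    """Deliberately simplistic stand-in for a CDS service, NOT clinical logic."""
    has_mmr = any(group == "MMR" for group, _ in doses)
    return {"MMR": None if has_mmr else first_birthday(birth_date)}


def edited_engine(birth_date: date, doses: Doses, assessment_date: date) -> Forecast:
    """Simulates a rule edit that accidentally moves the forecast by one day."""
    forecast = toy_engine(birth_date, doses, assessment_date)
    if forecast["MMR"] is not None:
        forecast["MMR"] += timedelta(days=1)
    return forecast


def run_regression(engine: Engine, cases: List[dict]) -> List[tuple]:
    """Run every case and collect (id, expected, actual) for each mismatch."""
    failures = []
    for case in cases:
        actual = engine(case["birth_date"], case["doses"], case["assessment_date"])
        if actual != case["expected"]:
            failures.append((case["id"], case["expected"], actual))
    return failures


# Hypothetical cases; the layout is illustrative, not the CDSi format.
CASES = [
    {"id": "mmr-001", "birth_date": date(2018, 1, 15), "doses": [],
     "assessment_date": date(2018, 10, 1),
     "expected": {"MMR": date(2019, 1, 15)}},
    {"id": "mmr-002", "birth_date": date(2017, 1, 15),
     "doses": [("MMR", date(2018, 1, 20))],
     "assessment_date": date(2018, 10, 1),
     "expected": {"MMR": None}},
]

print(run_regression(toy_engine, CASES))                         # []
print([f[0] for f in run_regression(edited_engine, CASES)])      # ['mmr-001']
```

Running the whole set after every rule edit, rather than only the cases for the vaccine that changed, is what catches the cross-vaccine side effects described earlier.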

But other test harnesses exist as well. At HLN we have a Test Manager, part of a more extensive CDS Administration Tool (CAT), which provides a user-friendly mechanism to test ICE rules and determine whether the service is providing the correct results. We have over 2,700 test cases currently defined, and we continue to modify and add test cases as the underlying rules change. The Test Manager is only used by our own analysts right now, but we certainly envision a time when other organizations using ICE might run their own tests with it.

We know that others in the IIS community have testing strategies as well. One IIS developed by a public health agency and in use in nearly 20 jurisdictions has an elaborate Excel spreadsheet that can be used to generate test data for import into the IIS. Because the test data can be repeatedly modified and reloaded, this provides some reasonable support for re-testing the rules when they change. Other IIS vendors likely have similar proprietary tools to support testing as well.

The American Immunization Registry Association (AIRA) also has a long tradition of thinking about issues related to immunization algorithm testing and has had several programs over the years to assess IIS adherence to ACIP clinical guidelines with a particular interest in promoting more uniformity in IIS performance across the country. This effort started with a small project to develop a tool to compare IIS algorithm results which grew out of an open source immunization algorithm project at Texas Children's Hospital. The test tool initially compared results from one or more IIS against the expected result that a user might indicate.

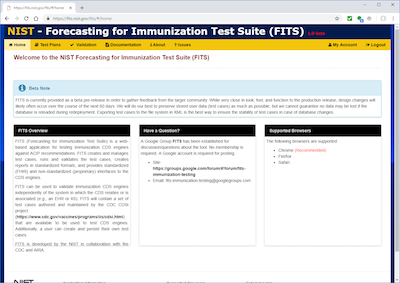
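
The comparison that the early tool performed, results from one or more systems checked against an expected result supplied by the user, can be sketched roughly as follows. This is not the actual tool: `compare_engines` is a hypothetical helper, and `engine_a` and `engine_b` are canned stand-ins that return fixed answers where a real harness would call each IIS or CDS service.

```python
from typing import Callable, Dict

Forecast = Dict[str, str]  # vaccine group -> recommended date (ISO string)


def engine_a(patient: dict) -> Forecast:
    """Canned stand-in for one system's response (hypothetical)."""
    return {"MMR": "2019-01-15"}


def engine_b(patient: dict) -> Forecast:
    """Canned stand-in for a second system's response (hypothetical)."""
    return {"MMR": "2019-01-16"}


def compare_engines(
    engines: Dict[str, Callable[[dict], Forecast]],
    patient: dict,
    expected: Forecast,
) -> Dict[str, dict]:
    """Run one case through every engine and flag who matches the expectation."""
    report = {}
    for name, engine in engines.items():
        actual = engine(patient)
        report[name] = {"actual": actual, "matches_expected": actual == expected}
    return report


patient = {"birth_date": "2018-01-15", "doses": []}
expected = {"MMR": "2019-01-15"}  # the result the user says is correct

report = compare_engines({"engine_a": engine_a, "engine_b": engine_b}, patient, expected)
disagreeing = [name for name, row in report.items() if not row["matches_expected"]]
print(disagreeing)  # ['engine_b']
```

Keeping the expected result separate from every engine's output is the point of the design: the report shows which systems diverge from the stated expectation, not merely that two systems differ from each other.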

Around the same time, AIRA, CDC and the National Institute of Standards and Technology (NIST) began a project to create a more generic test tool called the Forecasting for Immunization Test Suite (FITS) that allows anyone to enter or import (in CDC CDSi test case format) test cases into the tool and execute the test cases against a CDS service. The user has the ability to point the test tool at a valid service end-point and can address any one of a half-dozen types of services, including HLN's ICE and other CDS systems popular in the IIS community.

But AIRA has not stopped there. It has begun a program to test and eventually validate the compliance of IIS with the CDC CDSi Logic Specification as part of its broader measurement and improvement strategy. AIRA has developed an Aggregate Analysis Reporting Tool (AART) which incorporates FITS and uses its ability to generate an HL7 version 2 query, the type required by IIS to support Meaningful Use, and to interpret the immunization recommendations and forecast returned by the IIS in the response. While not all IIS are capable of this functionality, and the HL7 query/response specification itself may impose some constraints, this is an attempt to introduce some standardization into how at least IIS immunization CDS can be tested, based on a more generic tool that can be used by any system that uses one of the supported CDS services.

There is one additional effort worth mentioning. The Agency for Healthcare Research and Quality (AHRQ) is funding a project called CDS Connect. The goal of the project is to "demonstrate how evidence-based care can be more rapidly incorporated into clinical practice through interoperable decision support." Part of the focus is on promoting CDS rule authoring techniques that promote interoperability, like HL7's Clinical Quality Language (CQL), and on providing a mechanism to distribute CDS artifacts for re-use through an online repository (our ICE knowledge artifacts are in this repository now). But another part of the project centers on the development of an open source authoring tool that can be used to create and test CQL artifacts. While the tool does have limitations, as it is in its early stages of development, it may prove to be a useful resource for CQL-relevant CDS projects.

Conclusion

Folks are tired of hearing this, but in many ways it does come down to standards. Test tools like the ones described in this article can be generalized to work with different CDS artifacts within the same domain, or even different domains. But for that to work, the artifacts need to be expressed in a portable, standards-based language like CQL, and that language must be robust and feature-rich enough to allow the expression of both simple and complex rules. Emerging standards, like Object Management Group's (OMG) Decision Model and Notation (DMN) specification and shareable clinical pathways, and the tools that support them, provide another potential standard around which more uniformity and portability of CDS artifacts might be organized. But it will take more than that; the articulation of test cases themselves also needs to be standardized so that they, too, can be portable across systems and domains.

